Offline Pretrained TAMP Imitation System

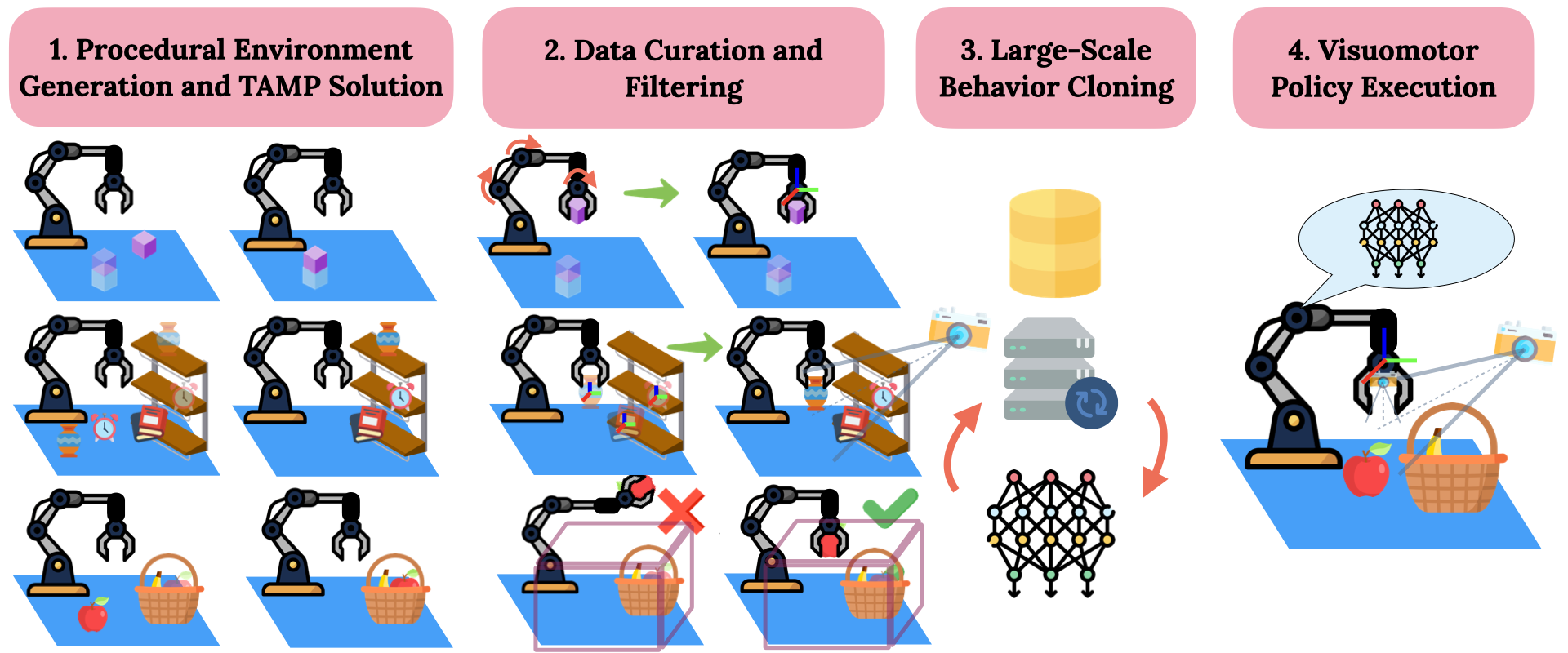

First, we generate a variety of tasks with differing initial configurations and goals. Next, we transform TAMP joint-space demonstrations into task space, replace the privileged scene knowledge available to TAMP with visual observations, and prune TAMP demonstrations based on workspace constraints. Finally, we perform large-scale behavior cloning with a Transformer-based architecture and execute the resulting visuomotor policies.
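The page does not spell out how the joint-to-task-space conversion or the workspace pruning is implemented. The sketch below illustrates the general idea under loudly stated assumptions: a hypothetical planar two-link arm with made-up link lengths (`LINK_LENGTHS`) and an axis-aligned rectangular workspace. Each joint-space demonstration is mapped to end-effector positions with forward kinematics, and any demonstration that ever leaves the workspace is discarded. All names here (`forward_kinematics`, `to_task_space`, `within_workspace`, `prune_demos`) are illustrative, not part of Optimus.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical planar 2-link arm (link lengths in metres), assumed for illustration only.
# A real robot would use its full kinematic model instead.
LINK_LENGTHS = (0.4, 0.3)

Point = Tuple[float, float]
Bounds = Tuple[Tuple[float, float], Tuple[float, float]]  # ((x_min, x_max), (y_min, y_max))


def forward_kinematics(q: Sequence[float], links: Sequence[float] = LINK_LENGTHS) -> Point:
    """Map joint angles (radians) to the end-effector (x, y) of a planar serial arm."""
    x = y = 0.0
    angle = 0.0
    for qi, li in zip(q, links):
        angle += qi  # joint angles accumulate along the chain
        x += li * math.cos(angle)
        y += li * math.sin(angle)
    return x, y


def to_task_space(joint_traj: Sequence[Sequence[float]]) -> List[Point]:
    """Convert a joint-space trajectory into a task-space (end-effector) trajectory."""
    return [forward_kinematics(q) for q in joint_traj]


def within_workspace(traj: Sequence[Point], bounds: Bounds) -> bool:
    """True if every end-effector position lies inside the rectangular workspace."""
    (x_lo, x_hi), (y_lo, y_hi) = bounds
    return all(x_lo <= x <= x_hi and y_lo <= y <= y_hi for x, y in traj)


def prune_demos(joint_demos: Sequence[Sequence[Sequence[float]]], bounds: Bounds) -> List[List[Point]]:
    """Keep only demonstrations whose task-space trajectory stays within the workspace."""
    kept = []
    for demo in joint_demos:
        task_traj = to_task_space(demo)
        if within_workspace(task_traj, bounds):
            kept.append(task_traj)
    return kept


if __name__ == "__main__":
    demos = [
        [(0.0, 0.0), (math.pi / 4, 0.0)],  # stays inside the box
        [(math.pi / 2, 0.0)],              # reaches y = 0.7, outside the box
    ]
    print(len(prune_demos(demos, ((-0.1, 1.0), (-0.5, 0.5)))))
```

Filtering whole demonstrations, rather than clipping individual states, keeps every retained trajectory a valid, complete solution to its task, which is what a behavior-cloning dataset needs.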

Optimus enables visuomotor policies to solve manipulation tasks with up to 8 stages.

Optimus can solve tasks requiring obstacle awareness and skills beyond pick-and-place.

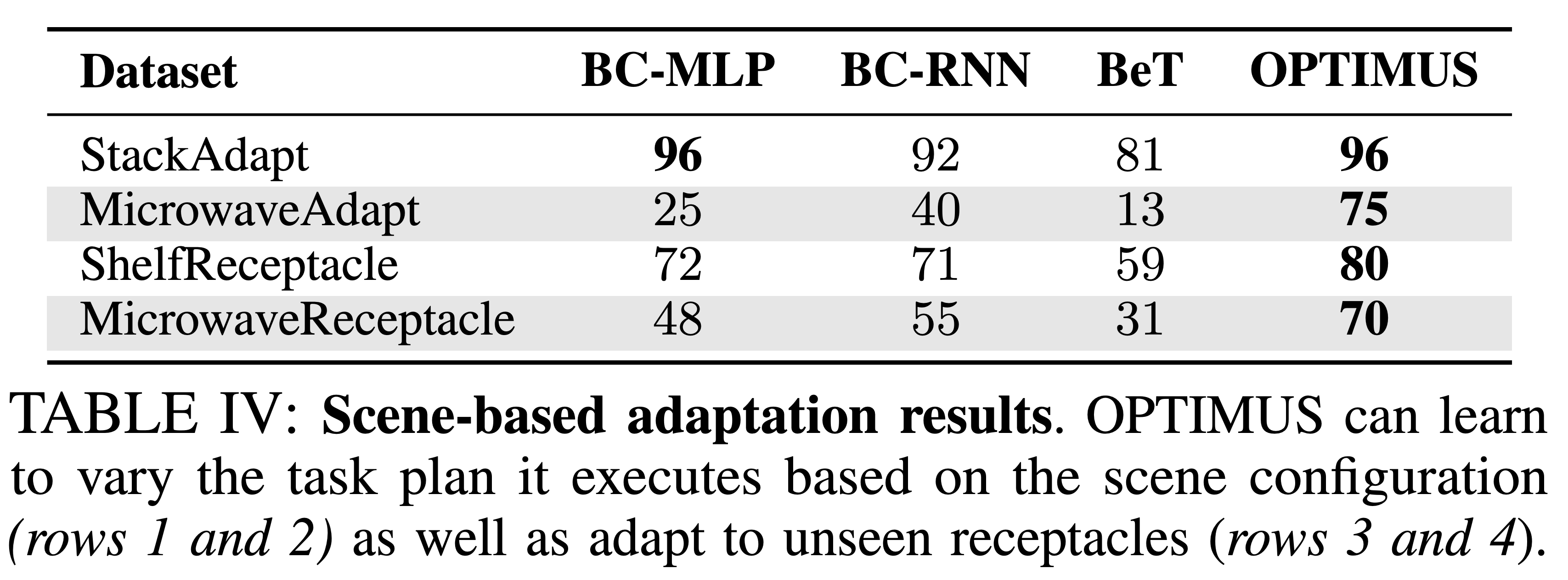

Optimus can distill TAMP's task planning and scene generalization capabilities.

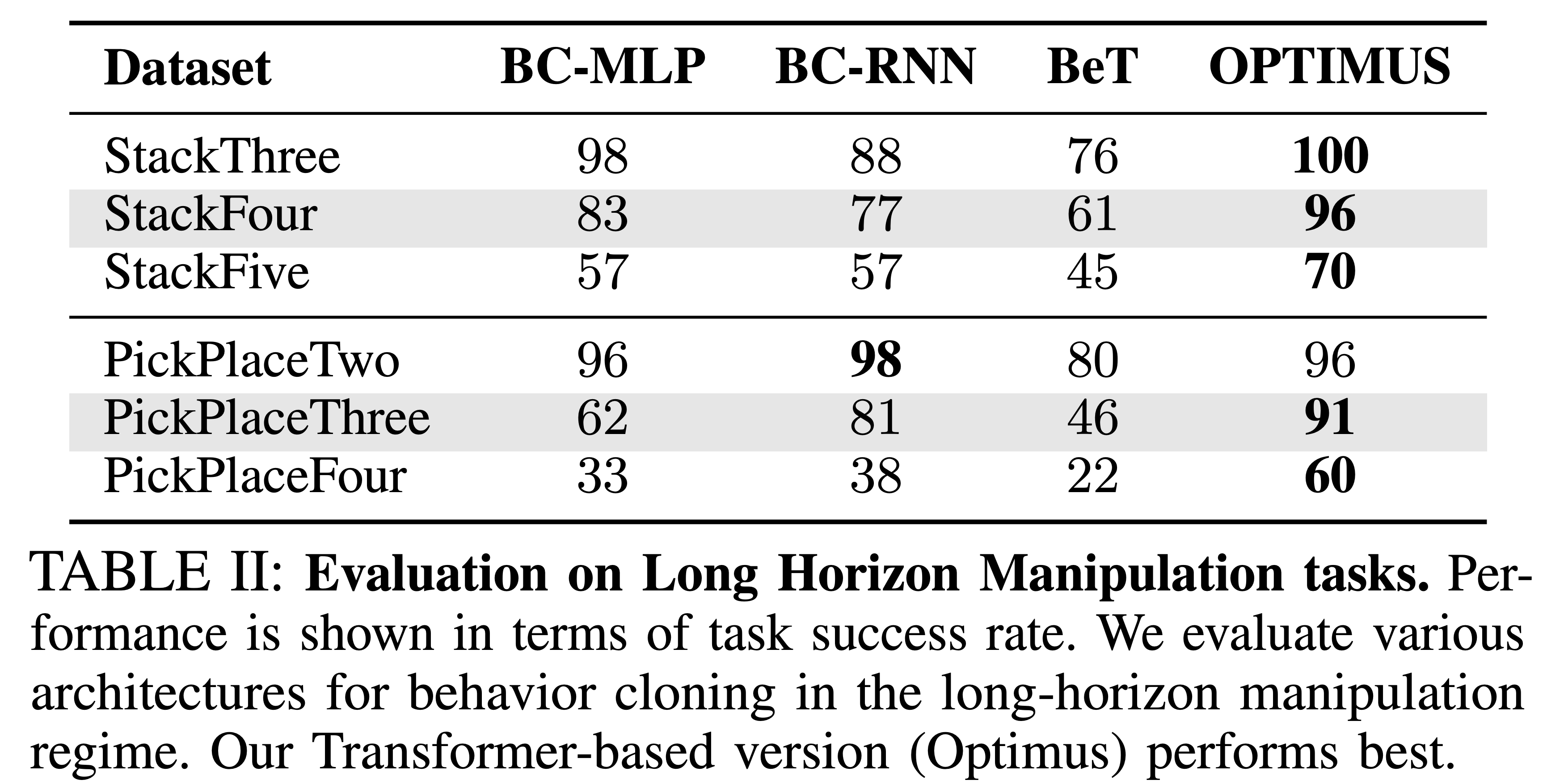

Large Scale Evaluation

Optimus produces TAMP-supervised visuomotor policies that can solve over 300 manipulation tasks with up to 72 different objects, achieving success rates of over 70%.

BibTeX

@inproceedings{dalal2023optimus,
  title={Imitating Task and Motion Planning with Visuomotor Transformers},
  author={Dalal, Murtaza and Mandlekar, Ajay and Garrett, Caelan and Handa, Ankur and Salakhutdinov, Ruslan and Fox, Dieter},
  booktitle={Conference on Robot Learning},
  year={2023}
}

Acknowledgements

We thank Russell Mendonca, Sudeep Dasari, Theophile Gervet and Brandon Trabucco for feedback on early drafts of this paper. This work was supported in part by ONR N000141812861, ONR N000142312368 and DARPA/AFRL FA87502321015. Additionally, MD is supported by the NSF Graduate Fellowship.